Azure AI Document Intelligence: Processing an Invoice and Saving It in an Azure SQL Database Using Azure Functions

In this article, we will use the Azure AI Document Intelligence service to process an invoice. We will implement the document processing with Azure AI, Azure Functions, Azure Blob Storage, and Azure SQL Database. The following figure shows the complete implementation.

Azure AI Services

Microsoft Azure AI is a comprehensive suite of Artificial Intelligence services and tools provided by Microsoft Azure. It helps organizations and developers build, deploy, and manage AI solutions at scale. Azure AI is designed to be scalable and flexible, which makes it suitable for designing and developing a wide range of applications, from simple chatbots to large-scale enterprise applications in domains such as healthcare, logistics, and data science. For more information on Azure AI, please visit this link.

Azure AI Document Intelligence

Azure AI Document Intelligence is a powerful service that uses advanced machine learning to extract text, key-value pairs, tables, and structures from documents automatically and accurately. It can be used to automate document processing workflows, enhance data-driven decision-making, and improve operational efficiency. Azure AI Document Intelligence offers the following capabilities:

- Prebuilt Models: used to process common documents such as receipts, invoices, and business cards. These models are ready to use without any training.

- Text Extraction: extracts printed as well as handwritten text from documents quickly and accurately using optical character recognition (OCR).

- Key-Value Pair Extraction: identifies and extracts key-value pairs from forms and documents, making the data easier to organize and analyze.

- Custom Models: we can define custom models to meet specific business needs.

- Flexible Deployment and Security: Document Intelligence can be deployed in the cloud or on-premises, and it provides enterprise-grade security to protect data and models.
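In code, a prebuilt model is chosen simply by passing its model ID to the analysis call; the InvoiceProcessor function later in this article passes "prebuilt-invoice". As a minimal sketch, the IDs can be kept in one lookup. The IDs other than prebuilt-invoice come from the v3.x model catalog and are assumptions to verify against the API version your SDK targets; `DocumentKind` and `PrebuiltModels` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical document categories used by this application
public enum DocumentKind { Invoice, Receipt, BusinessCard, IdDocument, Layout, Read }

public static class PrebuiltModels
{
    // Model IDs intended for the modelId argument of DocumentAnalysisClient.AnalyzeDocumentAsync
    private static readonly IReadOnlyDictionary<DocumentKind, string> Ids = new Dictionary<DocumentKind, string>
    {
        [DocumentKind.Invoice] = "prebuilt-invoice",
        [DocumentKind.Receipt] = "prebuilt-receipt",
        [DocumentKind.BusinessCard] = "prebuilt-businessCard",
        [DocumentKind.IdDocument] = "prebuilt-idDocument",
        [DocumentKind.Layout] = "prebuilt-layout",
        [DocumentKind.Read] = "prebuilt-read",
    };

    // Returns the model ID for a document kind, or throws if none is mapped
    public static string For(DocumentKind kind) =>
        Ids.TryGetValue(kind, out var id)
            ? id
            : throw new ArgumentOutOfRangeException(nameof(kind), kind, "No prebuilt model is mapped for this kind.");
}
```

Keeping the IDs in one place means a change of model (for example from the invoice model to a custom model ID) touches a single line.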

The prebuilt models cover document categories such as:

- Financial Services and Legal

- US Tax

- US Mortgage

- Personal Identification

Documents submitted for analysis must meet the following input requirements:

- Provide one clear photo or high-quality scan per document.

- For PDF and TIFF, up to 2,000 pages can be processed.

- The maximum file size for analyzing documents is 500 MB for the paid (S0) tier and 4 MB for the free (F0) tier.

- Image dimensions must be between 50 x 50 pixels and 10,000 x 10,000 pixels.

- If a PDF is password protected, remove the password before submitting it.

- The minimum height of the text to be extracted from the document is 12 pixels for a 1024 x 768 pixel image. This dimension corresponds to about 8 point text at 150 dots per inch (DPI).

- When using custom model training, the maximum number of pages for training data is 500 for the custom template model and 50,000 for the custom neural model.

- For the custom extraction model training, the total size of training data is 50 MB for template model and 1 GB for the neural model.

- For custom classification model training, the total size of training data is 1 GB with a maximum of 10,000 pages. For 2024-07-31-preview and later, the total size of training data is 2 GB with a maximum of 10,000 pages.
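The file size, page, and dimension limits above can be checked before a document is sent for analysis, which avoids a failed service call. A minimal sketch, assuming the limits listed above and treating 1 MB as 1,024 x 1,024 bytes; `Tier`, `InputLimits`, and their members are hypothetical names, and the service remains the final authority on what it accepts.

```csharp
using System;

// Hypothetical tier names matching the F0/S0 tiers described in this article
public enum Tier { F0, S0 }

public static class InputLimits
{
    // Assumption: 1 MB is treated as 1,024 x 1,024 bytes
    private const long MegaByte = 1024L * 1024L;
    public const int MaxPdfOrTiffPages = 2_000;
    public const int MinImageSide = 50;
    public const int MaxImageSide = 10_000;

    // 500 MB for the paid tier, 4 MB for the free tier
    public static long MaxFileBytes(Tier tier) => tier == Tier.S0 ? 500 * MegaByte : 4 * MegaByte;

    // Returns null when the file passes, otherwise a message naming the first rule it breaks
    public static string? CheckFile(Tier tier, long fileBytes, int pageCount)
    {
        if (fileBytes > MaxFileBytes(tier))
        {
            return $"File exceeds {MaxFileBytes(tier) / MegaByte} MB for tier {tier}.";
        }
        if (pageCount > MaxPdfOrTiffPages)
        {
            return $"Document has more than {MaxPdfOrTiffPages} pages.";
        }
        return null;
    }

    // Both image sides must lie between 50 and 10,000 pixels inclusive
    public static bool IsImageSizeValid(int widthPx, int heightPx) =>
        widthPx >= MinImageSide && widthPx <= MaxImageSide &&
        heightPx >= MinImageSide && heightPx <= MaxImageSide;
}
```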

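Document Intelligence is offered in the following pricing tiers:

- Free (F0): supports all document types for 0 to 500 pages per month, with 20 calls per minute for the recognizer API and 1 call per minute for the training API.

- Standard (S0): allows 1 call per minute for the training API and supports 0 to 1 million pages, priced at $1.50 per 1,000 pages at the time of writing (this is the Read model rate; prebuilt and custom models are billed at higher per-page rates).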
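At a per-page rate like the one quoted above, cost grows linearly with the page count: cost = pages / 1000 * rate. For example, 2,500 pages at $1.50 per 1,000 pages cost $3.75. A minimal helper, assuming the caller supplies the current rate for the model being used, since published prices change over time; `CostEstimate.ForPages` is a hypothetical name.

```csharp
using System;

public static class CostEstimate
{
    // Cost = pages / 1000 * rate, where the rate is the price per 1,000 pages
    public static decimal ForPages(long pages, decimal pricePerThousandPages)
    {
        if (pages < 0)
        {
            throw new ArgumentOutOfRangeException(nameof(pages), "Page count cannot be negative.");
        }
        return pages / 1000m * pricePerThousandPages;
    }
}
```

Using `decimal` keeps the result exact for currency amounts such as 3.75.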

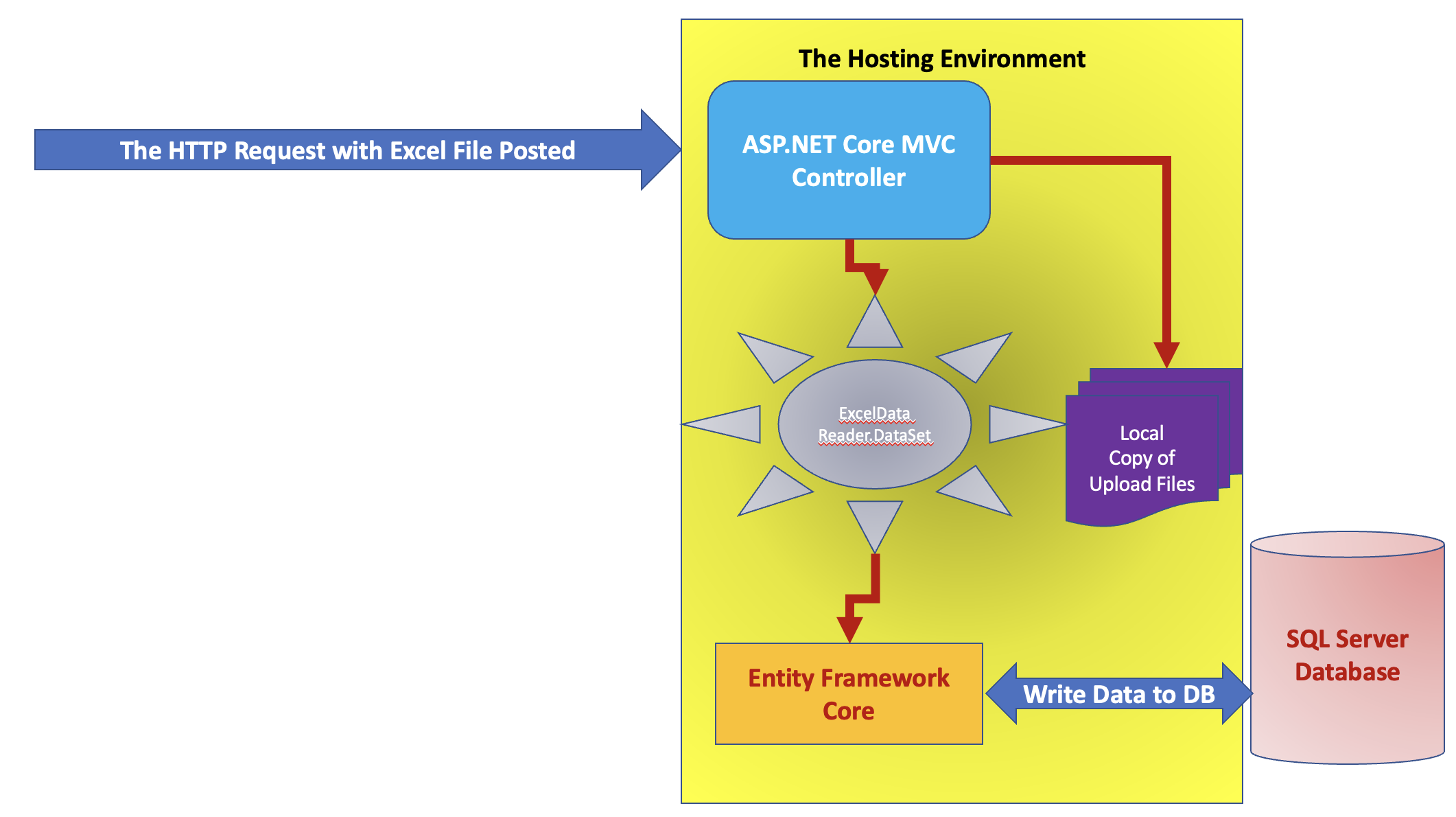

The complete process of the execution is shown in Figure 10.

Figure 10: The Application Structure

Creating Client Application

Open Visual Studio 2022 and create a new Azure Functions project named Az_Fn_HttpTrigger_FileUpload that targets .NET 8 (isolated worker). Make sure that the HTTP trigger template is selected. Figure 11 shows the project creation.

Figure 11: The Project creation with HttpTrigger

Once the project is created, modify the local.settings.json file. In this file, we define the keys and connection strings for Azure AI Document Intelligence, the Storage Account, and the Azure SQL database that we copied earlier in this article. Listing 2 shows the local.settings.json file.

```json
{
  "IsEncrypted": false,
  "Values": {
    "AzureWebJobsStorage": "[Azure-Web-Storage-Connection-String]",
    "FUNCTIONS_WORKER_RUNTIME": "dotnet-isolated",
    "AzureAIServiceEndpoint": "[Azure-AI-Document-Intelligence-Endpoint]",
    "AzureAIServiceKey": "[Azure-AI-Document-Intelligence-Key]",
    "DatabaseConnectionString": "[Database-Connection-String]"
  }
}
```

Listing 2: The Settings file

To access the Azure AI Document Intelligence service, Azure SQL Database, and Azure Blob Storage, we need to add the following NuGet packages to the project:

- Azure.AI.FormRecognizer: the .NET client library for Azure AI Document Intelligence (formerly Form Recognizer), a cloud-based service that uses machine learning to analyze documents and extract information from them. The service supports the following major features:

  - Layout Extraction

  - Document Analysis

  - Prebuilt Models

  - Custom Models

  - Classification

- Azure.Storage.Blobs: provides classes to access Azure Blob Storage and perform operations such as upload, read, and download.

- Microsoft.Data.SqlClient: the data provider used to connect to SQL Server and Azure SQL Database.

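Next, modify the Program.cs file to read the settings and register the Blob Storage and Document Intelligence clients for dependency injection, as shown in Listing 3.

```csharp
using Azure;
using Azure.AI.FormRecognizer.DocumentAnalysis;
using Azure.Storage.Blobs;
using Microsoft.Azure.Functions.Worker;
using Microsoft.Extensions.Configuration;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Hosting;

var host = new HostBuilder()
    .ConfigureFunctionsWebApplication()
    .ConfigureAppConfiguration(config =>
    {
        // Load the keys and connection strings from local.settings.json
        config.AddJsonFile("local.settings.json", optional: true, reloadOnChange: true);
    })
    .ConfigureServices((context, services) =>
    {
        services.AddApplicationInsightsTelemetryWorkerService();
        services.ConfigureFunctionsApplicationInsights();

        // One BlobServiceClient shared by all functions, authenticated with the storage connection string
        services.AddSingleton(_ => new BlobServiceClient(context.Configuration["Values:AzureWebJobsStorage"]));

        // Document Intelligence client authenticated with the service endpoint and key
        services.AddSingleton(_ => new DocumentAnalysisClient(
            new Uri(context.Configuration["Values:AzureAIServiceEndpoint"]),
            new AzureKeyCredential(context.Configuration["Values:AzureAIServiceKey"])));
    })
    .Build();

host.Run();
```

Listing 3: The Program.cs file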

In Listing 3, the local.settings.json file is loaded through the AddJsonFile() method of the IConfigurationBuilder interface. The Blob service client is created with the BlobServiceClient class, which authenticates the application to Azure Blob Storage with the connection string so that it can upload and download blobs to and from blob containers.
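The `Values:` prefix works in Listing 3 because AddJsonFile() loads local.settings.json as ordinary JSON. That file is meant for local development and is not published to Azure; in Azure, the same keys are supplied as application settings, which reach the worker as environment variables and typically appear in IConfiguration without the `Values:` prefix. A sketch under that assumption: a lookup that tries both forms keeps one code path for both environments. `SettingsResolver` is a hypothetical helper, and it takes a `Func<string, string?>` so it can wrap IConfiguration (for example `key => context.Configuration[key]`) without depending on it.

```csharp
using System;

public static class SettingsResolver
{
    // Tries "Values:<name>" (local.settings.json loaded as JSON) first,
    // then "<name>" (application settings exposed as environment variables)
    public static string Get(Func<string, string?> lookup, string name)
    {
        var value = lookup($"Values:{name}");
        if (string.IsNullOrWhiteSpace(value))
        {
            value = lookup(name);
        }
        return string.IsNullOrWhiteSpace(value)
            ? throw new InvalidOperationException($"Setting '{name}' is not configured.")
            : value;
    }
}
```

Usage in Program.cs could then read `SettingsResolver.Get(key => context.Configuration[key], "AzureAIServiceKey")`, and a missing key fails at startup with a clear message instead of a null argument later.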

The DocumentAnalysisClient class, from the Azure.AI.FormRecognizer client library, is used to analyze documents and images and extract information from them. It supports various document types, including receipts, business cards, invoices, and identity documents.

The AzureKeyCredential class authenticates requests to Azure services with an API key. It is useful when you authenticate with a key rather than with token-based methods such as Microsoft Entra ID (OAuth) tokens.

In the project, rename Function1.cs to UploadBlob.cs. This function is injected with the BlobServiceClient so that it can access Azure Blob Storage. Because the function is triggered by an HTTP request, the Run() method accepts an HTTP POST request and reads the file sent in it. The code verifies that the file has the .pdf extension and then uploads it to the invoices container in Azure Blob Storage. Listing 4 shows the complete code.

```csharp
using Azure.Storage.Blobs;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Azure.Functions.Worker;
using Microsoft.Extensions.Logging;

namespace Az_Fn_HttpTrigger_FileUpload
{
    public class UploadBlob
    {
        private readonly ILogger<UploadBlob> _logger;
        private readonly BlobServiceClient _blobServiceClient;

        public UploadBlob(ILogger<UploadBlob> logger, BlobServiceClient blobServiceClient)
        {
            _logger = logger;
            _blobServiceClient = blobServiceClient;
        }

        [Function("upload")]
        public async Task<IActionResult> Run([HttpTrigger(AuthorizationLevel.Function, "post")] HttpRequest req)
        {
            _logger.LogInformation("C# HTTP trigger function processed a request.");

            if (!req.HasFormContentType)
            {
                return new BadRequestObjectResult("No file uploaded.");
            }

            // 1. Read the file from the multipart/form-data request without blocking
            var form = await req.ReadFormAsync();
            if (form.Files.Count == 0)
            {
                return new BadRequestObjectResult("No file uploaded.");
            }

            var file = form.Files[0];
            if (!CheckFileExtension(file))
            {
                return new BadRequestObjectResult("Invalid file extension. Only PDF files are allowed.");
            }

            // 2. Get the Blob container
            var containerClient = _blobServiceClient.GetBlobContainerClient("invoices");

            // 3. Create the Blob container if it doesn't exist
            await containerClient.CreateIfNotExistsAsync();

            // 4. Upload the file, overwriting any blob with the same name
            var blobName = Path.GetFileName(file.FileName);
            var blobClient = containerClient.GetBlobClient(blobName);
            using (var stream = file.OpenReadStream())
            {
                await blobClient.UploadAsync(stream, overwrite: true);
            }

            return new OkObjectResult($"File {blobName} uploaded successfully.");
        }

        private static bool CheckFileExtension(IFormFile file)
        {
            // Path.GetExtension copes with names that contain several dots or none,
            // and the comparison accepts ".PDF" as well as ".pdf"
            var extension = Path.GetExtension(file.FileName);
            return string.Equals(extension, ".pdf", StringComparison.OrdinalIgnoreCase);
        }
    }
}
```

Listing 4: The File Upload Function on HttpTrigger
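CheckFileExtension() trusts the file name only. A sketch of a stricter check, assuming you also want to confirm the content: conforming PDF files begin with the header bytes `%PDF-`, so the helper checks both the extension (case-insensitively) and the first five bytes, then restores the stream position when the stream is seekable. Some PDF readers tolerate a few bytes before the header, so this check may reject unusual files. `PdfCheck` is a hypothetical helper; in UploadBlob it could be called with `file.FileName` and `file.OpenReadStream()`.

```csharp
using System;
using System.IO;
using System.Text;

public static class PdfCheck
{
    private static readonly byte[] PdfHeader = Encoding.ASCII.GetBytes("%PDF-");

    // Extension check that accepts "invoice.v2.PDF" and rejects names without a .pdf extension
    public static bool HasPdfExtension(string fileName) =>
        string.Equals(Path.GetExtension(fileName), ".pdf", StringComparison.OrdinalIgnoreCase);

    // Reads up to five bytes and compares them with the PDF header
    public static bool StartsWithPdfHeader(Stream stream)
    {
        long start = stream.CanSeek ? stream.Position : 0;
        var buffer = new byte[PdfHeader.Length];
        int read = 0;
        while (read < buffer.Length)
        {
            int n = stream.Read(buffer, read, buffer.Length - read);
            if (n == 0)
            {
                break; // stream ended before five bytes were available
            }
            read += n;
        }
        if (stream.CanSeek)
        {
            stream.Position = start; // leave the stream ready for the upload
        }
        return read == buffer.Length && buffer.AsSpan().SequenceEqual(PdfHeader);
    }

    public static bool IsPdf(string fileName, Stream content) =>
        HasPdfExtension(fileName) && StartsWithPdfHeader(content);
}
```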

Since we will be using the prebuilt invoice model, Figure 12 shows the format of the invoice that we will process.

Figure 12: The Invoice format

Note that we can also create a custom model using Document Intelligence Studio. That process is not covered in this article; I will demonstrate it in the next article.

To process the invoice with the Azure AI Document Intelligence service, we will add a new Azure Function named InvoiceProcessor to the current project. It uses a Blob trigger, so it runs whenever an invoice is uploaded to Blob Storage. Figure 13 shows the newly added function.

Figure 13: Blob Trigger Function to Process the invoice

In this function we will use the AnalyzeDocumentOperation and AnalyzeResult classes.

The AnalyzeDocumentOperation class in the Azure.AI.FormRecognizer.DocumentAnalysis namespace is part of the Azure SDK for .NET. It tracks the status of the long-running operation that analyzes a document, and once the operation has completed, its Value property returns the analysis result.
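Passing WaitUntil.Completed to AnalyzeDocumentAsync makes the SDK keep checking the operation status until the analysis finishes, so the function receives a completed AnalyzeDocumentOperation. The sketch below only illustrates that polling idea in plain C#; it is not the SDK's implementation, and `PollStatus`, `LongRunning.PollAsync`, the status delegate, and the doubling delay are hypothetical.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical status values for a long-running operation
public enum PollStatus { Running, Succeeded, Failed }

public static class LongRunning
{
    // Asks for the status until it is no longer Running, waiting between checks
    // and doubling the wait each time, capped at maxDelay
    public static async Task<PollStatus> PollAsync(
        Func<CancellationToken, Task<PollStatus>> getStatus,
        TimeSpan initialDelay,
        TimeSpan maxDelay,
        CancellationToken cancellationToken = default)
    {
        var delay = initialDelay;
        while (true)
        {
            PollStatus status = await getStatus(cancellationToken);
            if (status != PollStatus.Running)
            {
                return status;
            }
            await Task.Delay(delay, cancellationToken);
            delay = TimeSpan.FromTicks(Math.Min(delay.Ticks * 2, maxDelay.Ticks));
        }
    }
}
```

Growing the delay keeps short analyses responsive while avoiding a flood of status requests for long documents.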

The AnalyzeResult class in the Azure.AI.FormRecognizer.DocumentAnalysis namespace represents the result of analyzing one or more documents with a trained or prebuilt model. Its Documents collection holds the analyzed documents, and each document's Fields dictionary holds the extracted values, so the results can be processed programmatically.
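Each extracted field also carries a confidence score (DocumentField exposes a Confidence value). A common design is to accept high-confidence values automatically and route the rest to a person for review. The sketch below shows that routing rule with plain C# types; `ExtractedField`, `ReviewRouter`, and the 0.8 threshold are hypothetical, and in the function the values would be copied from document.Fields.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical, SDK-independent view of one extracted field
public record ExtractedField(string Name, string? Content, float? Confidence);

public static class ReviewRouter
{
    // Returns the required field names that are missing, empty, or below the threshold
    public static IReadOnlyList<string> FieldsNeedingReview(
        IEnumerable<ExtractedField> fields, IEnumerable<string> requiredNames, float threshold = 0.8f)
    {
        var byName = fields.ToDictionary(f => f.Name, StringComparer.Ordinal);
        return requiredNames
            .Where(name => !byName.TryGetValue(name, out var field)
                           || string.IsNullOrWhiteSpace(field.Content)
                           || (field.Confidence ?? 0f) < threshold)
            .ToList();
    }
}
```

An empty result means the invoice can be saved directly; otherwise the listed fields could be flagged instead of being written to the database unchecked.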

Listing 5 shows the code for the InvoiceProcessor function.

```csharp
using Azure;
using Azure.AI.FormRecognizer.DocumentAnalysis;
using Azure.Storage.Blobs;
using Microsoft.Azure.Functions.Worker;
using Microsoft.Data.SqlClient;
using Microsoft.Extensions.Configuration;
using Microsoft.Extensions.Logging;

namespace Az_Fn_HttpTrigger_FileUpload
{
    public class InvoiceProcessor
    {
        private readonly ILogger<InvoiceProcessor> _logger;
        private readonly string _sqlConnectionString;
        private readonly DocumentAnalysisClient _documentAnalysisClient;
        private readonly BlobServiceClient _blobServiceClient;

        public InvoiceProcessor(ILogger<InvoiceProcessor> logger, IConfiguration configuration,
            DocumentAnalysisClient documentAnalysisClient, BlobServiceClient blobServiceClient)
        {
            _logger = logger;
            _documentAnalysisClient = documentAnalysisClient;
            _blobServiceClient = blobServiceClient;
            // Fail fast if the database connection string is not configured
            _sqlConnectionString = configuration["Values:DatabaseConnectionString"]
                ?? throw new InvalidOperationException("DatabaseConnectionString configuration is missing.");
        }

        [Function(nameof(InvoiceProcessor))]
        public async Task Run([BlobTrigger("invoices/{name}", Connection = "AzureWebJobsStorage")] Stream stream, string name)
        {
            // The blob is a binary PDF, so the stream goes straight to the analyzer
            // instead of being read into a string first
            await ProcessInvoiceAsync(stream, name);
            _logger.LogInformation("C# Blob trigger function processed blob {Name}", name);
        }

        /// <summary>
        /// Analyze the invoice with the prebuilt invoice model and save the extracted fields
        /// </summary>
        private async Task ProcessInvoiceAsync(Stream blobStream, string name)
        {
            try
            {
                // Reset the stream position to the beginning when the stream supports it
                if (blobStream.CanSeek)
                {
                    blobStream.Position = 0;
                }

                AnalyzeDocumentOperation operation = await _documentAnalysisClient.AnalyzeDocumentAsync(
                    WaitUntil.Completed, "prebuilt-invoice", blobStream);
                AnalyzeResult result = operation.Value;

                foreach (AnalyzedDocument document in result.Documents)
                {
                    // The model omits fields it cannot find, so check before reading typed values
                    if (!document.Fields.TryGetValue("InvoiceDate", out DocumentField? dateField)
                        || dateField.FieldType != DocumentFieldType.Date
                        || !document.Fields.TryGetValue("InvoiceTotal", out DocumentField? totalField)
                        || totalField.FieldType != DocumentFieldType.Currency)
                    {
                        _logger.LogWarning("Invoice {Name} has no InvoiceDate or InvoiceTotal; skipping.", name);
                        continue;
                    }

                    string invoiceId = GetString(document, "InvoiceId");
                    string vendorName = GetString(document, "VendorName");
                    string customerName = GetString(document, "CustomerName");
                    DateTime invoiceDate = dateField.Value.AsDate().DateTime;
                    double totalAmount = totalField.Value.AsCurrency().Amount;

                    if (await SaveToDB(invoiceId, vendorName, customerName, invoiceDate, totalAmount))
                    {
                        _logger.LogInformation("Invoice saved to database successfully.");
                        // Save the Blob in the processed container
                        await SaveBlobToProcessedContainer(blobStream, name);
                    }
                    else
                    {
                        _logger.LogError("Failed to save invoice to database.");
                    }
                }
            }
            catch (Exception ex)
            {
                _logger.LogError(ex, "Error processing invoice {Name}", name);
                // Rethrow without resetting the stack trace so the failure reaches the Functions runtime
                throw;
            }
        }

        // Returns the string value of a field, or an empty string when it is missing or not a string
        private static string GetString(AnalyzedDocument document, string fieldName) =>
            document.Fields.TryGetValue(fieldName, out DocumentField? field) && field.FieldType == DocumentFieldType.String
                ? field.Value.AsString()
                : string.Empty;

        /// <summary>
        /// Save Invoice to Database
        /// </summary>
        private async Task<bool> SaveToDB(string invoiceId, string vendorName, string customerName, DateTime invoiceDate, double totalAmount)
        {
            const string query = "INSERT INTO Invoices (InvoiceId, VendorName, CustomerName, InvoiceDate, TotalAmount) " +
                                 "VALUES (@InvoiceId, @VendorName, @CustomerName, @InvoiceDate, @TotalAmount)";

            await using var connection = new SqlConnection(_sqlConnectionString);
            await using var command = new SqlCommand(query, connection);
            // Parameters keep the extracted text from being interpreted as SQL
            command.Parameters.AddWithValue("@InvoiceId", invoiceId);
            command.Parameters.AddWithValue("@VendorName", vendorName);
            command.Parameters.AddWithValue("@CustomerName", customerName);
            command.Parameters.AddWithValue("@InvoiceDate", invoiceDate);
            command.Parameters.AddWithValue("@TotalAmount", totalAmount);

            await connection.OpenAsync();
            int rowsAffected = await command.ExecuteNonQueryAsync();
            return rowsAffected == 1;
        }

        /// <summary>
        /// Save the Processed Invoice Blob to Processed Container (server-side copy by blob name)
        /// </summary>
        private async Task SaveBlobToProcessedContainer(Stream blobStream, string blobName)
        {
            try
            {
                var sourceContainerClient = _blobServiceClient.GetBlobContainerClient("invoices");
                var destinationContainerClient = _blobServiceClient.GetBlobContainerClient("processed-invoices");
                await destinationContainerClient.CreateIfNotExistsAsync();

                var sourceBlobClient = sourceContainerClient.GetBlobClient(blobName);
                var destinationBlobClient = destinationContainerClient.GetBlobClient(blobName);

                // The copy runs inside the storage service; wait until it has finished
                var copyOperation = await destinationBlobClient.StartCopyFromUriAsync(sourceBlobClient.Uri);
                await copyOperation.WaitForCompletionAsync();

                _logger.LogInformation("Blob {BlobName} copied to processed container successfully.", blobName);
            }
            catch (Exception ex)
            {
                _logger.LogError(ex, "Error saving blob {BlobName} to processed container", blobName);
                throw;
            }
        }
    }
}
```

Listing 5: The InvoiceProcessor function

The code in Listing 5 works as follows:

- The constructor receives the required dependencies: IConfiguration to read settings from the local.settings.json file, the DocumentAnalysisClient for document analysis, and the BlobServiceClient to access Azure Blob Storage.

- The Run() method is triggered when a new blob is uploaded to the invoices container.

- The ProcessInvoiceAsync() method receives the uploaded blob stream and the blob name. It calls the AnalyzeDocumentAsync() method of the DocumentAnalysisClient class, which analyzes the document in the blob stream with the prebuilt invoice model (prebuilt-invoice) and returns an AnalyzeDocumentOperation. The Value property of that operation returns an AnalyzeResult; the method iterates over its analyzed documents, reads the invoice details, and passes them to the SaveToDB() method.

- The SaveToDB() method saves the invoice details to the Invoices table in the AppDb database that we created in Azure SQL.

- After the invoice is saved, ProcessInvoiceAsync() calls the SaveBlobToProcessedContainer() method, which accepts the blob stream and blob name and copies the processed invoice into the processed-invoices blob container.
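AsCurrency().Amount returns a double, while currency columns in SQL Server are usually declared as decimal or money. If the Invoices table uses a decimal TotalAmount column (its definition is not shown in this section, so this is an assumption), converting and rounding in code keeps binary floating-point artifacts such as 0.30000000000000004 out of the stored value. `MoneyConversion.ToMoney` is a hypothetical helper whose result could be passed to the @TotalAmount parameter.

```csharp
using System;

public static class MoneyConversion
{
    // Converts the double returned by the SDK to decimal, rounded to the currency's minor unit
    public static decimal ToMoney(double amount, int decimals = 2)
    {
        if (double.IsNaN(amount) || double.IsInfinity(amount))
        {
            throw new ArgumentOutOfRangeException(nameof(amount), "Amount must be a finite number.");
        }
        return Math.Round((decimal)amount, decimals, MidpointRounding.AwayFromZero);
    }
}
```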

Run the application and upload the invoice using Advanced REST Client, as shown in Figure 14.

Figure 14: Upload the Invoice
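Any HTTP client that can send multipart/form-data can be used instead of Advanced REST Client, since the upload function reads the first file from the posted form. The sketch below builds such a request with HttpClient. It assumes the Functions host is running locally on its default port 7071 with the default `api` route prefix, so the URL is http://localhost:7071/api/upload; the form field name `file` and the `InvoiceUploader` class are hypothetical. Function keys are not enforced when running locally; for a deployed app, the key would be appended as `?code=<function key>`.

```csharp
using System;
using System.IO;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Threading.Tasks;

public static class InvoiceUploader
{
    // Builds a multipart/form-data body containing one PDF file
    public static MultipartFormDataContent BuildContent(byte[] pdfBytes, string fileName)
    {
        var fileContent = new ByteArrayContent(pdfBytes);
        fileContent.Headers.ContentType = new MediaTypeHeaderValue("application/pdf");
        var form = new MultipartFormDataContent();
        form.Add(fileContent, "file", fileName);
        return form;
    }

    // Posts the file to the upload function and returns the response text
    public static async Task<string> UploadAsync(HttpClient client, string path,
        string functionUrl = "http://localhost:7071/api/upload")
    {
        using var content = BuildContent(await File.ReadAllBytesAsync(path), Path.GetFileName(path));
        using var response = await client.PostAsync(functionUrl, content);
        response.EnsureSuccessStatusCode();
        return await response.Content.ReadAsStringAsync();
    }
}
```

Usage: `using var http = new HttpClient(); Console.WriteLine(await InvoiceUploader.UploadAsync(http, "invoice.pdf"));` should print the "uploaded successfully" message returned by the function.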

The invoice document is uploaded to the invoices container, as shown in Figure 15.

Figure 15: Uploaded Invoice Document

Once the blob is uploaded, the InvoiceProcessor function is triggered and processes the blob, as shown in Figure 16.

Figure 16: The Processing Trigger

If you now query the Invoices table, you will see that the invoice record has been created, as shown in Figure 17.

Figure 17: The Invoice record

Once the record is inserted into the table, the processed invoice is stored in the processed-invoices container, as shown in Figure 18.

Figure 18: Processed invoice

That's it.

The Code for this article can be downloaded from this link.

Conclusion: Azure AI Document Intelligence is a powerful service that provides great flexibility for managing and processing documents. In domains such as healthcare, insurance, and loan processing, this service can play a crucial role.