Azure OpenAI: Creating a Question-Answer Chat Application using Azure OpenAI Services, Azure AI Foundry, and Blazor

In this article, we will implement a Question-Answer application using Azure OpenAI and Blazor. Azure OpenAI is a Microsoft service that provides access to advanced language models developed by OpenAI, such as GPT-4, GPT-3.5-Turbo, and Codex. Some of the key aspects of Azure OpenAI are as follows:

- Advanced Language Models: Azure OpenAI offers several powerful models for a variety of natural language processing tasks.

- Custom AI Solutions: Enables the creation of tailored AI applications for content generation, summarization, and similar tasks.

- Multimodal Capabilities: Support for text, images, and audio makes it possible to build diverse applications.

- Integration with other Azure Services: Seamless integration with other Azure AI services and products makes it easier to build comprehensive solutions.

- Flexible Deployment: Various deployment options and pricing models suit different business applications.

- Built-In Security and Compliance: Offers robust security measures and compliance features.

- Responsible AI: Applies responsible AI practices to help ensure the ethical and safe use of AI technologies.

The application we develop in this article uses the GPT-4-32k model deployed through Azure AI Foundry. Figure 1 gives an overview of the implementation.

Figure 1: The application

The first step is to create an Azure AI Services resource. You must have an Azure subscription. Visit https://portal.azure.com and log in with your subscription. Create a resource group on the portal; all the resources for this application must be inside this resource group. On the portal, select Azure AI Services as shown in Figure 2.

Figure 2: Select Azure AI Services

Click the Create link on the Azure AI Services page. On the Create Azure AI services page, enter details such as Resource Group, Region, Name, and Pricing tier, as shown in Figure 3.

Figure 3: The Azure AI Service Details

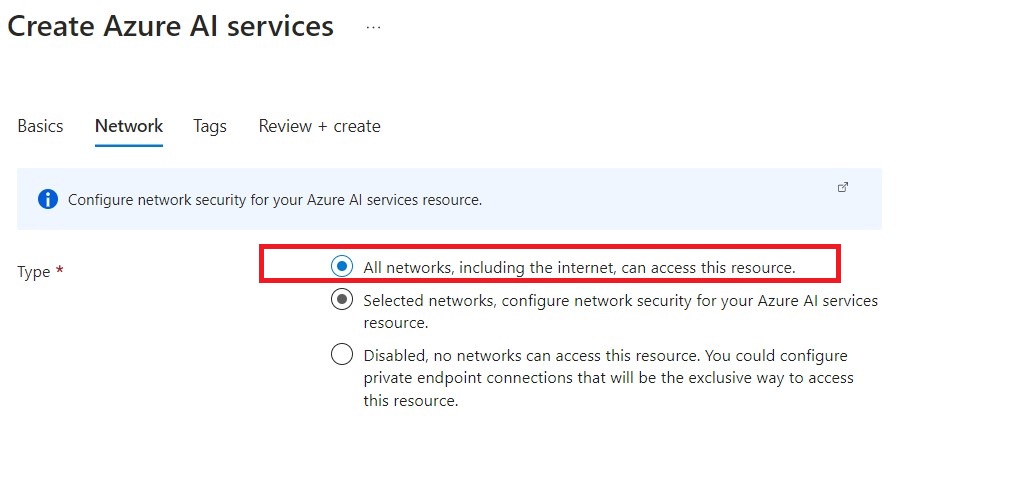

Click the Next button and, on the Network tab, select All networks as shown in Figure 4. This allows the client application to access the AI Service endpoint easily.

Figure 4: The Network Settings

Click the Review + create button to create the service. Once the service is created, it is shown in the portal along with its keys, as shown in Figure 5.

Figure 5: The Service details

We need to create a deployment to access the GPT model. Click Go to Azure AI Foundry portal.

What is Azure AI Foundry? (Formerly known as Azure AI Studio)

Azure AI Foundry is a comprehensive platform designed to help developers build, deploy, and manage AI-driven applications. It has the following key features:

- Wide Range of Models: Azure AI Foundry offers a large catalog of models (over 1800), including the latest models from Azure OpenAI Service and various other providers.

- Unified Platform: Integration with tools such as Visual Studio, GitHub, and Copilot Studio offers a seamless environment for AI development.

- Scalability and Customization: Models can be customized to fit application requirements, and applications can scale to satisfy growing demand.

- Responsible AI: Built-in controls and APIs help mitigate risks and support compliance with global data protection regulations.

- Collaboration and Management: Projects in Azure AI Foundry facilitate collaboration, organization, and connection to data and services.

Figure 6: The Azure AI Foundry portal

Here we can create a new deployment. Click the Deploy model button to show the deployment options, as shown in Figure 7.

Figure 7: The Deployment options

Select Deploy base model. This opens a dialog box where you can select a model, e.g. gpt-4-32k, and click the Confirm button, as shown in Figure 8.

Figure 8: The Deployment model

Here we have selected the GPT-4-32k model. This is an extended version of the GPT-4 model, designed to handle a significantly larger context length: it can process up to 32,768 tokens in a single pass, which is roughly 40 pages of text. This makes it well suited to tasks involving long documents, such as analyzing lengthy reports or interacting with extensive PDF documents. Once the Confirm button is clicked, the Deployment dialog box is shown, where we can enter details such as Deployment Name, Deployment Type, Model Version, Tokens per Minute Rate Limit, and Content Filter, as shown in Figure 9.

Figure 9: Deployment Details
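The 32,768-token limit applies to the prompt and the generated answer together, so a request's budget can be checked before it is sent. The following C# sketch is only a rough pre-flight check: it assumes about four characters per token, a common rule of thumb for English text rather than the model's actual tokenizer, and the helper names `EstimateTokens` and `FitsContextWindow` are hypothetical. It can be run as a .NET console app with top-level statements.

```csharp
using System;

// Rough pre-flight check for a GPT-4-32k request (illustrative sketch).
// Assumption: ~4 characters per token; the real count depends on the model's tokenizer.

// Hypothetical helper: estimate how many tokens a piece of text uses.
static int EstimateTokens(string text) => (int)Math.Ceiling(text.Length / 4.0);

// Hypothetical helper: the prompt plus the requested completion must fit in the context window.
static bool FitsContextWindow(string prompt, int maxCompletionTokens, int contextWindow = 32_768) =>
    EstimateTokens(prompt) + maxCompletionTokens <= contextWindow;

string shortPrompt = "Summarize the key points of this report.";
string longPrompt = new string('x', 200_000); // about 50,000 estimated tokens

Console.WriteLine(FitsContextWindow(shortPrompt, 100)); // True
Console.WriteLine(FitsContextWindow(longPrompt, 100));  // False
```

The heuristic only flags requests that are clearly too large; exact counts require the model's tokenizer.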

The Standard deployment type offers the following features:

- Pay-per-Call Billing: we only pay for the API calls that are made. This is a cost-effective option for low to medium volume workloads with high burstiness.

- Global Infrastructure: traffic is dynamically routed across Azure's global infrastructure to the best available data center, which helps ensure high availability and low latency.

- Scalability: this is ideal for getting started quickly and scaling as needed, though high, consistent volume may experience greater latency variability.

The Provisioned deployment type, by contrast, offers:

- Guaranteed Throughput

- Provisioned Throughput Units (PTU)

- Cost Efficiency

- Global and Data Zone Options

Once the deployment is completed, copy the Endpoint and Key so that the client application can access the deployment. The Endpoint and Key are shown in Figure 10.

Figure 10: The Deployment Details
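For Azure OpenAI deployments, the chat-completions endpoint typically combines the resource name, the deployment name, and an api-version query parameter. The sketch below assumes that common URL pattern; the resource name `my-ai-resource`, the deployment name, the API version `2024-02-01`, and the helper `BuildChatCompletionsUri` are placeholders for illustration, and the exact Target URI shown for your deployment in Azure AI Foundry should take precedence.

```csharp
using System;

// Hypothetical helper: compose a chat-completions URL from its parts.
// Assumed pattern (check the Target URI shown in Azure AI Foundry):
//   https://{resource}.openai.azure.com/openai/deployments/{deployment}/chat/completions?api-version={version}
static Uri BuildChatCompletionsUri(string resourceName, string deploymentName, string apiVersion) =>
    new Uri($"https://{resourceName}.openai.azure.com/openai/deployments/" +
            $"{Uri.EscapeDataString(deploymentName)}/chat/completions" +
            $"?api-version={Uri.EscapeDataString(apiVersion)}");

// Placeholder values; replace them with your own resource, deployment, and API version.
Uri endpoint = BuildChatCompletionsUri("my-ai-resource", "gpt-4-32k", "2024-02-01");
Console.WriteLine(endpoint);
// https://my-ai-resource.openai.azure.com/openai/deployments/gpt-4-32k/chat/completions?api-version=2024-02-01
```

A URL of this shape is the kind of value that goes into the Deployments:Endpoint setting of appsettings.json.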

Once the Model Deployment is successfully completed, we can use it in the client application.

The deployment can be tested using either Postman or Advanced REST Client (ARC), as shown in Figure 11.

Figure 11: The ARC testing of the Deployment

The request body for the HTTP POST request must be in the form shown in Figure 12.

Figure 12: The HTTP POST request
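The same request can be prepared in C# without sending it, which makes its shape easy to inspect. This sketch assumes key-based authentication through the `api-key` header and a body containing a `messages` array and `max_tokens`, matching the request built later in Listing 3; the endpoint URL and key are placeholders, and `BuildChatRequest` is a hypothetical helper.

```csharp
using System;
using System.Linq;
using System.Net.Http;
using System.Text;
using System.Text.Json;

// Hypothetical helper: build (but do not send) a chat-completions POST request.
static HttpRequestMessage BuildChatRequest(string endpoint, string apiKey, string question, int maxTokens)
{
    var request = new HttpRequestMessage(HttpMethod.Post, endpoint);
    // Key-based authentication: the key travels in the "api-key" header.
    request.Headers.Add("api-key", apiKey);
    // Chat-completions body: role/content messages plus a cap on generated tokens.
    var body = new
    {
        messages = new[] { new { role = "user", content = question } },
        max_tokens = maxTokens
    };
    request.Content = new StringContent(JsonSerializer.Serialize(body), Encoding.UTF8, "application/json");
    return request;
}

// Placeholder endpoint and key.
using var request = BuildChatRequest(
    "https://my-ai-resource.openai.azure.com/openai/deployments/gpt-4-32k/chat/completions?api-version=2024-02-01",
    "[API-KEY]",
    "What is Blazor?",
    100);

Console.WriteLine(request.Headers.GetValues("api-key").Single()); // [API-KEY]
Console.WriteLine(await request.Content!.ReadAsStringAsync());
// {"messages":[{"role":"user","content":"What is Blazor?"}],"max_tokens":100}
```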

The response has the format shown in Figure 13.

Figure 13: The response

The response contains a choices array; each choice has a message object whose content property holds the generated answer.
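One way to read that nested structure without defining classes is `JsonDocument` from System.Text.Json. The sample JSON below is an abbreviated, made-up response used only for illustration, and `ExtractAnswer` is a hypothetical helper that returns `null` when the expected `choices[0].message.content` path is missing.

```csharp
using System;
using System.Text.Json;

// Abbreviated, made-up response in the chat-completions shape (illustration only).
const string sampleResponse = """
    {
      "choices": [
        {
          "index": 0,
          "finish_reason": "stop",
          "message": { "role": "assistant", "content": "Blazor is a .NET framework for building web UIs." }
        }
      ]
    }
    """;

// Hypothetical helper: read choices[0].message.content, or return null if the shape differs.
static string? ExtractAnswer(string json)
{
    using JsonDocument doc = JsonDocument.Parse(json);
    if (doc.RootElement.TryGetProperty("choices", out JsonElement choices) &&
        choices.ValueKind == JsonValueKind.Array &&
        choices.GetArrayLength() > 0 &&
        choices[0].TryGetProperty("message", out JsonElement message) &&
        message.TryGetProperty("content", out JsonElement content) &&
        content.ValueKind == JsonValueKind.String)
    {
        return content.GetString();
    }
    return null;
}

Console.WriteLine(ExtractAnswer(sampleResponse)); // Blazor is a .NET framework for building web UIs.
```

Each choice also reports a `finish_reason`; with `max_tokens` set to 100, as in Listing 3, a value of `length` indicates the answer was cut off at the token limit.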

Step 1: Open Visual Studio 2022, create a new Blazor Web App, and name it Blazor_OpenAI. In this project, modify the appsettings.json file and add new keys for the deployment Endpoint and API Key, as shown in Listing 1.

.......
"Deployments": {
  "Endpoint": "[DEPLOYMENT-ENDPOINT]",
  "APIKey": "[API-KEY]"
}
.......

Listing 1: The appsettings.json with endpoint and keys
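ASP.NET Core flattens nested JSON sections into colon-separated keys, which is why the service in Listing 3 reads Deployments:Endpoint and Deployments:APIKey. The sketch below loads the Listing 1 section from an in-memory stream to show that mapping; it assumes the Microsoft.Extensions.Configuration.Json package, which ASP.NET Core apps already include, and uses placeholder values only.

```csharp
using System;
using System.IO;
using System.Text;
using Microsoft.Extensions.Configuration;

// Same shape as Listing 1, with placeholder values.
const string appSettings = """
    {
      "Deployments": {
        "Endpoint": "[DEPLOYMENT-ENDPOINT]",
        "APIKey": "[API-KEY]"
      }
    }
    """;

// Load the JSON from memory (assumes the Microsoft.Extensions.Configuration.Json package).
using var stream = new MemoryStream(Encoding.UTF8.GetBytes(appSettings));
IConfiguration configuration = new ConfigurationBuilder()
    .AddJsonStream(stream)
    .Build();

// Nested JSON objects become colon-separated keys.
Console.WriteLine(configuration["Deployments:Endpoint"]);              // [DEPLOYMENT-ENDPOINT]
Console.WriteLine(configuration.GetSection("Deployments")["APIKey"]); // [API-KEY]
```

Configuration keys are matched case-insensitively, so deployments:apikey returns the same value as Deployments:APIKey.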

Step 2: To collect the response from the HTTP request made to the deployment endpoint, we need to add classes to the Blazor application. In the application, add a new folder named Models. In this folder, add a new class file named QnAInfrastructure.cs. In this class file we will add code for the Message, Choice, and AnswerResponse classes. The code is shown in Listing 2.

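namespace Blazor_OpenAI.Models
{
    // The lowercase property names match the JSON field names exactly,
    // because System.Text.Json matches property names case-sensitively by default.
    public class AnswerResponse
    {
        public Choice[]? choices { get; set; }
    }

    public class Choice
    {
        public Message? message { get; set; }
    }

    public class Message
    {
        public string? content { get; set; }
    }
}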

Listing 2: The Classes to collect response

Step 3: In the project, add a new folder named Services. In this folder, add a new class file named QnAServiceClient.cs containing the QnAServiceClient class. This class uses the HttpClient class to send an HTTP POST request to the deployment from its GetMyAnswersAsync() method. This method reads the Endpoint and API Key from the appsettings.json file. The API Key must be passed in the HTTP header named api-key. The request body carries the question as a user message, sets max_tokens to 100 (which caps the length of the generated answer), and sets model to gpt-4-32k. Once the response is received, it is deserialized into the AnswerResponse class. The code for the class is shown in Listing 3.

using Blazor_OpenAI.Models;
using Microsoft.Extensions.Configuration;
using System.Text;
using System.Text.Json;

namespace Blazor_OpenAI.Services
{
    /// <summary>
    /// Create a service that will handle the interaction with Azure OpenAI.
    /// </summary>
    public class QnAServiceClient
    {
        // A single HttpClient is reused for all requests (the class is registered as a singleton).
        private readonly HttpClient _httpClient = new HttpClient();
        private readonly IConfiguration _configuration;

        public QnAServiceClient(IConfiguration configuration)
        {
            _configuration = configuration ?? throw new ArgumentNullException(nameof(configuration));
        }

        public async Task<string?> GetMyAnswersAsync(string question)
        {
            string? answer = null;
            try
            {
                // 1. Read the deployment endpoint from appsettings.json
                string? endpoint = _configuration["Deployments:Endpoint"];
                if (string.IsNullOrEmpty(endpoint))
                {
                    throw new InvalidOperationException("Endpoint configuration is missing or empty.");
                }
                using var request = new HttpRequestMessage(HttpMethod.Post, endpoint);

                // 2. Add the api-key header used for key-based authentication
                string? apiKey = _configuration["Deployments:APIKey"];
                if (string.IsNullOrEmpty(apiKey))
                {
                    throw new InvalidOperationException("API Key is missing or empty.");
                }
                request.Headers.Add("api-key", apiKey);

                // 3. Define the request body: the question is the user message and the answer is capped at 100 tokens
                var body = new
                {
                    messages = new[]
                    {
                        new { role = "user", content = question }
                    },
                    max_tokens = 100,
                    model = "gpt-4-32k" // On Azure OpenAI the deployment in the endpoint URL selects the model
                };

                // 4. Serialize the body as JSON request content
                request.Content = new StringContent(
                    JsonSerializer.Serialize(body),
                    Encoding.UTF8, "application/json"
                );

                // 5. Send the HTTP request
                using var response = await _httpClient.SendAsync(request);

                // 6. Surface the error details returned with a Bad Request
                if (response.StatusCode == System.Net.HttpStatusCode.BadRequest)
                {
                    var errorResponse = await response.Content.ReadAsStringAsync();
                    throw new InvalidOperationException($"Bad Request: {errorResponse}");
                }
                response.EnsureSuccessStatusCode();

                // 7. Read the response
                var responseBody = await response.Content.ReadAsStringAsync();

                // 8. Deserialize the response
                var responseAnswer = JsonSerializer.Deserialize<AnswerResponse>(responseBody);

                // 9. Process the response: the answer is in choices[0].message.content
                if (responseAnswer?.choices is { Length: > 0 } choices && choices[0].message?.content is string text)
                {
                    answer = text;
                }
                else
                {
                    throw new InvalidOperationException("The response does not contain a valid answer.");
                }
            }
            catch (Exception ex)
            {
                answer = $"An error occurred while processing your request: {ex.Message}";
            }
            return answer;
        }
    }
}

Listing 3: The code for QnAServiceClient class

Step 4: Let's modify Program.cs to register the QnAServiceClient class in the dependency injection container. We also need the Interactive Server render mode so that button click events in Blazor components can be executed. Listing 4 shows the code for Program.cs.

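using Blazor_OpenAI.Services;
...................
// Razor components with interactive server rendering
// (the Blazor Web App template adds this when Server interactivity is selected)
builder.Services.AddRazorComponents()
    .AddInteractiveServerComponents();

// Register the QnAServiceClient
builder.Services.AddSingleton<QnAServiceClient>();

var app = builder.Build();

app.MapRazorComponents<App>()
    .AddInteractiveServerRenderMode();
......................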

Listing 4: The Program.cs code

Step 5: In the Pages subfolder of the Components folder, add a new Razor component named QnA.razor. In this component we inject the QnAServiceClient class and set the render mode to InteractiveServer so that button click events are handled. Listing 5 shows the code for the component.

@page "/qna"
@rendermode InteractiveServer
@using Blazor_OpenAI.Services
@inject QnAServiceClient qnaService

<h3>Ask Me Your Question</h3>

<div class="container alert alert-warning">
    <label for="question">Enter your question:</label>
    <InputText id="question" @bind-Value="question" class="form-control"/>
    <br/>
    <br/>
    @if (!string.IsNullOrEmpty(answer))
    {
        <h6>Your Answer</h6>
        <div class="container alert alert-light overflow-auto">
            <pre class="brush:csharp;brush:javascript">@answer</pre>
        </div>
    }
    <div class="btn-group-lg alert-warning">
        <button class="btn btn-danger" @onclick="@clear">Clear</button>
        <button class="btn btn-success" @onclick="@getAnswer">Get Answer</button>
    </div>
</div>

@code {
    private string question = string.Empty;
    private string answer = string.Empty;

    private void clear()
    {
        question = string.Empty;
        answer = string.Empty;
    }

    // Sends the bound question to Azure OpenAI and shows the returned answer (or error text)
    private async Task getAnswer()
    {
        answer = await qnaService.GetMyAnswersAsync(question) ?? string.Empty;
    }
}

Listing 5: The Component code

In this component, we enter our question in the InputText component, which is bound to the question field. The Get Answer button is bound to the getAnswer() method, which passes the question to the GetMyAnswersAsync() method of QnAServiceClient. Based on this question, the Azure OpenAI service returns the answer, which the component displays.

Step 6: Modify the NavMenu.razor by adding the navigation link for the QnA component as shown in Listing 6.

<div class="nav-item px-3">
    <NavLink class="nav-link" href="qna">
        <span class="bi bi-list-nested-nav-menu" aria-hidden="true"></span> Question and Answer
    </NavLink>
</div>

Listing 6: The Navigation Link

Run the application and navigate to the QnA component. Enter a question in the text box and click the Get Answer button. The result is displayed as shown in Figure 14.

Figure 14: The output

The code for this article can be downloaded from this link.